The most common question an ETL developer or data warehouse implementer faces concerns performance. Whatever the tool, and however fast a job already runs, end users will always want it to run a little faster.

The following tips will help developers improve the performance of ETL tasks built in Oracle Data Integrator (ODI).

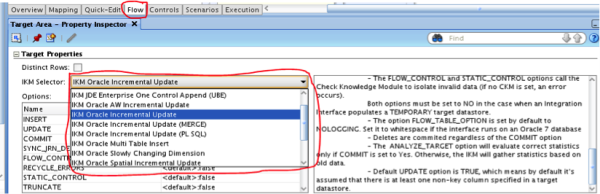

- Select appropriate Knowledge Modules (KMs) to load data. Each knowledge module is designed for a specific technology. Identify it and use the one that best fits your implementation. For a simple file-to-Oracle-database transfer, native database utilities such as SQL*Loader (SQLLDR) or External Tables will provide better performance.

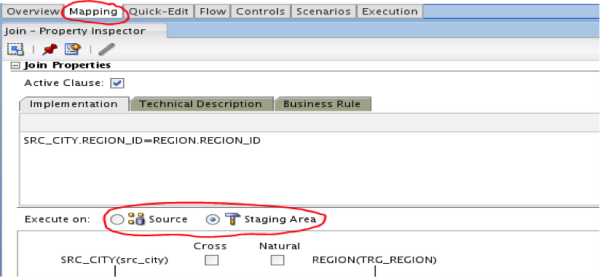

- Executing joins and filters in the appropriate area improves performance significantly. If the source holds many more records than the filter will keep, set the filter condition to execute on the source rather than on the staging area, so that fewer records are transferred.

- Check where the Agent runs in your setup. If your target is a remote host, it is usually best to run the Agent on the target host rather than on the source. As exceptions, run the Agent on the source server if a large amount of data is to be loaded, or on the staging/target server if significant transformations are performed in the staging/target area.

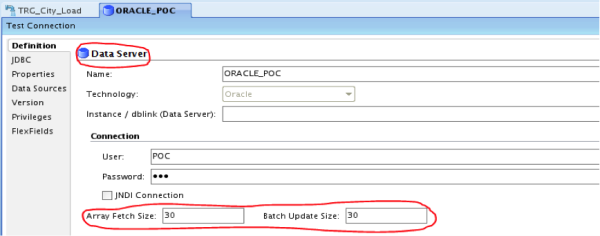
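The filter-placement tip above can be illustrated with a small sketch. It is not ODI code: it uses an in-memory SQLite database as a stand-in for the source, and the `orders` table, its columns, and the row counts are assumptions made up for the example. It only counts how many rows would have to leave the source in each case.

```python
import sqlite3


def build_source(n_rows=10_000):
    """Create an in-memory stand-in for a source table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        ((i, "OPEN" if i % 100 == 0 else "CLOSED") for i in range(n_rows)),
    )
    return conn


def filter_on_staging(source):
    """Move every row out of the source, then filter after the transfer."""
    moved = source.execute("SELECT id, status FROM orders").fetchall()
    kept = [row for row in moved if row[1] == "OPEN"]
    return len(moved), len(kept)


def filter_on_source(source):
    """Filter inside the source query, so only matching rows are moved."""
    moved = source.execute(
        "SELECT id, status FROM orders WHERE status = ?", ("OPEN",)
    ).fetchall()
    return len(moved), len(moved)


source = build_source()
staging_moved, staging_kept = filter_on_staging(source)
source_moved, source_kept = filter_on_source(source)
print(f"filter on staging: moved {staging_moved} rows to keep {staging_kept}")
print(f"filter on source:  moved {source_moved} rows to keep {source_kept}")
```

Both approaches keep the same rows; the difference is how many rows cross the connection first, which is the cost the tip aims to reduce.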

- Increase the Array Fetch Size and Batch Update Size of your data server so that the Agent fetches and inserts data in larger batches.

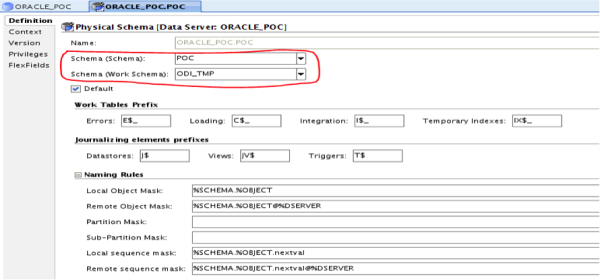

- Pay attention when setting up the Physical Architecture. If the staging and target areas are on the same server, make sure you have selected the correct Data Schema and Work Schema for the tasks.

- Enable archival logging on the system and regularly purge logs and archive logs. This helps clean up the metadata tables that use the most storage, thereby reducing the start time and execution time of scenarios.

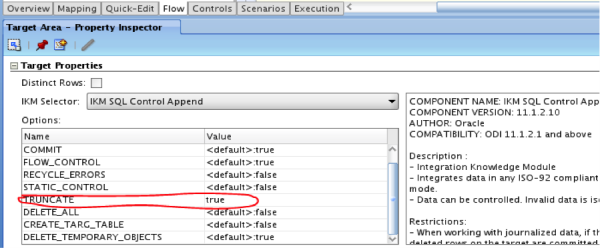

- Temporary tables are handy for debugging during development, but remember to set truncate mode ON for temporary tables when migrating to Production, particularly for an append process on a table, because untruncated temporary tables will prove expensive.

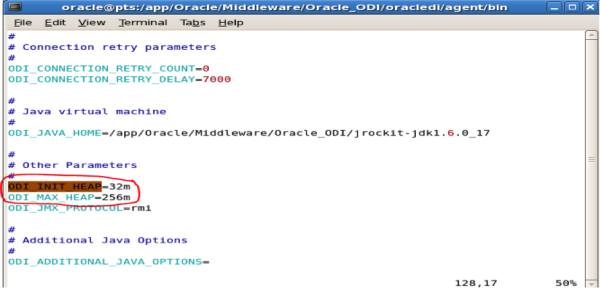

- Set up the JVM heap appropriately for the size of the project. The main variables are ODI_INIT_HEAP and ODI_MAX_HEAP, found in the odiparams.bat (on Windows) or odiparams.sh (on Unix/Linux) file of the ODI installation. The recommended settings are ODI_INIT_HEAP = 256M and ODI_MAX_HEAP = 1024M. However, tune these values to the project for better performance rather than leaving them at the default settings.

Other points to consider, based on Oracle Note 423726, “Best Approaches for Performance Optimization Strategies for ODI Scenario Execution”:

Correctly Locate the Staging Area:

The Staging Area is usually located on the Target Server, but in some cases, moving the Staging Area to one of the source servers drastically reduces the volumes of data to transfer. For example, if an Integration Interface aggregates a large amount of source data to generate a small data flow to a remote target, then you should consider locating the Staging Area on the source.

Use the Journalization/Change Data Capture Feature:

This feature can dramatically reduce the volume of source data by restricting the flow to changed data only. ODI provides two methods for capturing changes: trigger-based and log-based. Log-based CDC causes less overhead on the operational sources and is the preferred method.
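As a rough illustration of the trigger-based approach, the sketch below records the keys of changed rows in a journal table and then reads only those rows. It is not how ODI's journalizing KMs are implemented: it uses SQLite, and the `customer` and `customer_journal` tables and the trigger names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
-- Hypothetical journal table holding the keys of changed rows.
CREATE TABLE customer_journal (id INTEGER, change_type TEXT);

CREATE TRIGGER customer_ins AFTER INSERT ON customer
BEGIN
    INSERT INTO customer_journal VALUES (NEW.id, 'I');
END;

CREATE TRIGGER customer_upd AFTER UPDATE ON customer
BEGIN
    INSERT INTO customer_journal VALUES (NEW.id, 'U');
END;
""")

# Initial load, then empty the journal as if that load were already consumed.
conn.executemany(
    "INSERT INTO customer VALUES (?, ?)",
    ((i, f"name-{i}") for i in range(1000)),
)
conn.execute("DELETE FROM customer_journal")

# Later activity on the source: three updates and one new row.
conn.execute("UPDATE customer SET name = 'renamed' WHERE id IN (1, 2, 3)")
conn.execute("INSERT INTO customer VALUES (1000, 'new customer')")

total_rows = conn.execute("SELECT COUNT(*) FROM customer").fetchone()[0]
changed = conn.execute(
    "SELECT id, name FROM customer "
    "WHERE id IN (SELECT id FROM customer_journal) ORDER BY id"
).fetchall()

print(f"full extract would read {total_rows} rows")
print(f"changed-data extract reads {len(changed)} rows")
```

The triggers add work to every write on the source table, which is the overhead that log-based capture avoids.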

Select the Best Loading Strategy to Accelerate Data Movement From the Three Main Methods Provided by ODI:

- Data Flowing Through the Agent: When data flows through the Agent, it is usually read from a source connection (typically via JDBC) in batches of records and written to the target in batches. The Array Fetch parameter in the source data server definition sets the size of the batches read from the source and held in the Agent, and the Batch Update parameter in the target data server definition sets the size of the batches written to the target. With a highly available network, you can keep low values (<30, for example); with a poor network, you can use larger values (100 and more).

- Data Flowing Through Loaders: When loaders are used to transfer data, the Agent delegates the work to external loading programs such as Oracle SQL*Loader or Microsoft SQL Server BCP.

- Database Engine Specific Methods: These include server-to-server communication through technologies such as Oracle Database links (using OCI) or Microsoft SQL Server Linked Servers; file-to-table loading methods such as Oracle External Tables; creating AS/400 DDM files and loading data through a CPYF command; and using an unloader/loader method through UNIX pipes instead of files for very large volumes.
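To make the effect of the Array Fetch and Batch Update sizes concrete, here is a minimal sketch that copies rows between two in-memory SQLite databases and counts the fetch and write calls. It is not the Agent's actual code path: the `sales` table, the row count, and the function names are assumptions, and because SQLite runs in-process, the counts only stand in for the network round trips a real JDBC connection would make.

```python
import sqlite3

ROW_COUNT = 1000  # illustrative volume, not taken from the article


def make_db(with_data):
    """Create an in-memory database with a hypothetical sales table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL)")
    if with_data:
        conn.executemany(
            "INSERT INTO sales VALUES (?, ?)",
            ((i, i * 1.5) for i in range(ROW_COUNT)),
        )
    return conn


def copy_in_batches(source, target, array_fetch, batch_update):
    """Copy all rows, returning (fetch_calls, write_calls)."""
    cursor = source.execute("SELECT id, amount FROM sales")
    fetch_calls = write_calls = 0
    pending = []
    while True:
        rows = cursor.fetchmany(array_fetch)  # one read batch
        if not rows:
            break
        fetch_calls += 1
        pending.extend(rows)
        # Flush full write batches as soon as enough rows are buffered.
        while len(pending) >= batch_update:
            target.executemany(
                "INSERT INTO sales VALUES (?, ?)", pending[:batch_update]
            )
            write_calls += 1
            del pending[:batch_update]
    if pending:  # final partial batch
        target.executemany("INSERT INTO sales VALUES (?, ?)", pending)
        write_calls += 1
    target.commit()
    return fetch_calls, write_calls


def run(array_fetch, batch_update):
    """Copy with the given sizes and report (fetches, writes, rows copied)."""
    source, target = make_db(True), make_db(False)
    fetches, writes = copy_in_batches(source, target, array_fetch, batch_update)
    copied = target.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
    return fetches, writes, copied


low = run(30, 30)
high = run(100, 100)
print("batch size 30:  fetches, writes, rows =", low)
print("batch size 100: fetches, writes, rows =", high)
```

Larger batches mean fewer calls for the same number of rows, at the cost of more rows held in memory at once, which is why the right value depends on the network and the Agent's heap.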

Prevent useless joins and unwanted data integrity checks to accelerate data integration to the target by selecting an appropriate integration strategy and integrity process. Examples of inappropriate choices include:

- Using an incremental update knowledge module with the DELETE TARGET and INSERT options selected. A control append knowledge module would produce the same result with better performance.

- Checking the DISTINCT option for integration when duplicates are not possible, or when duplicate records are caused by incorrectly defined joins. Performing a DISTINCT is a time-consuming operation for a database engine.

Load balancing improves performance by distributing the execution of sessions over several Agents.
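The idea of spreading sessions over Agents can be sketched as follows. This is only an illustration of one possible policy (send each session to the Agent with the lowest relative load), not ODI's actual balancing algorithm; the `Agent` class, the agent names, and the capacities are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical agent with a session capacity and a running count."""
    name: str
    max_sessions: int
    running: int = 0

    @property
    def load(self):
        # Relative load: fraction of the agent's capacity in use.
        return self.running / self.max_sessions


def dispatch(agents, session_count):
    """Assign each session to the agent with the lowest relative load."""
    assignments = []
    for session in range(session_count):
        candidates = [a for a in agents if a.running < a.max_sessions]
        if not candidates:
            raise RuntimeError("all agents are at capacity")
        chosen = min(candidates, key=lambda a: a.load)
        chosen.running += 1
        assignments.append((session, chosen.name))
    return assignments


agents = [Agent("agent_a", max_sessions=4), Agent("agent_b", max_sessions=2)]
assignments = dispatch(agents, 6)
for session, name in assignments:
    print(f"session {session} -> {name}")
```

Using relative rather than absolute load lets a larger Agent take proportionally more sessions than a smaller one.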
